Artur Bergman, founder of a CDN exclusively powered by super fast SSDs, has made many compelling cases over the years to use them. He was definitely ahead of the curve here, but he’s right. Nowadays, they’re denser, 100x faster and as competitively priced as hard disks in most server configurations.

At Etsy, we’ve been trying to get on this bandwagon for the last 5 years too. SSDs have become much better value for money in the last year, so we’ve gone from “dipping our toes in the water” to “ORDER EVERYTHING WITH SSDs!” pretty rapidly.

This isn’t a post about how great SSDs are though: Seriously, they’re amazing. The new Dell R630 allows for 24x 960GB 1.8″ SSDs in a 1U chassis. That’s 19TB usable ludicrously fast, sub millisecond latency storage after RAID6, that will blow away anything you can get on spinning rust, use less power, and is actually reasonably priced per GB.
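For anyone wanting to check the capacity maths, here is a minimal sketch. It assumes the 19TB figure is quoted in binary units (TiB), that drive sizes are decimal gigabytes, and that no drives are held back as hot spares; the function name is just for illustration.

```python
GB = 10**9    # drive vendors quote decimal gigabytes
TIB = 2**40   # operating systems often report binary tebibytes


def raid6_usable_bytes(drive_count: int, drive_bytes: int) -> int:
    """Usable capacity of a RAID6 array: two drives' worth goes to parity."""
    if drive_count < 4:
        raise ValueError("RAID6 needs at least 4 drives")
    return (drive_count - 2) * drive_bytes


usable = raid6_usable_bytes(24, 960 * GB)
print(f"{usable / 10**12:.2f} TB decimal, {usable / TIB:.1f} TiB binary")
# prints "21.12 TB decimal, 19.2 TiB binary"
```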

So if this post isn’t “GO BUY ALL THE SSDs NOW”, what is it? Well, it’s a cautionary tale that it’s not all unicorns and IOPs.

The problem(s) with SSDs

When SSDs first started to come out, people were concerned that these drives “only” handled a certain number of operations or data during their lifetime, and they’d be changing SSDs far more frequently than conventional spinning rust. Actually, that’s totally not the case and we haven’t experienced that at all. We have thousands of SSDs, and we’ve lost maybe one or two to old age, and it probably wasn’t wear related.

Spoiler alert: SSD firmware is buggy

When was the last time your hard disk failed because the firmware did something whacky? Well, Seagate had a pretty famous case back in 2009 where the drives may not ever power on again if you power them off. Whoops.

But the majority of times, the issue is the physical hardware… The infamous “spinning rust” that is in the drive.

So, SSDs solve this forever right? No moving parts.. Measured mean time to failure of hundreds of years before the memory wears out? Perfect!

Here’s the run down of the firmware issues we’ve had over 5 or so years:

Intel

Okay, bad start, we’ve actually had no issues with Intel. This seems to be common across other companies we’ve spoken to. We started putting single 160GB drives in our web servers about 4 years ago, because they gave us low power, fast, reliable storage and the space requirements for web servers and utility boxes were low anyway. No more waiting for the metal to seize up! We have SSDs that have long outlived the servers.

OCZ

Outside of the 160GB Intel drives, our search (Solr) stack was the first to benefit from denser, fast storage. Search indexes were getting big; too big for memory. In addition, getting them off disk and serving search results to users was limited by the random disk latency.

Rather than many expensive, relatively fast but low capacity spinning rust drives in a RAID array, we opted for OCZ Talos 960GB disks. These weren’t too bad; we had a spate of initial failures in what seemed like a bad batch, but we were able to learn from this and make the app more resilient to failures.

However, they had poor SMART info (none) so predicting failures was hard.
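For drives that do expose SMART data, the kind of check we mean can be sketched roughly like this. It assumes you have captured the attribute table that "smartctl -A" prints, and treats an attribute as failing when its normalised value has dropped to or below the vendor threshold. The sample rows are made up for illustration and are not output from any of our drives.

```python
def failing_attributes(smart_table: str) -> list[str]:
    """Return names of SMART attributes whose value is at or below threshold."""
    failing = []
    for line in smart_table.splitlines():
        fields = line.split()
        # Attribute rows start with a numeric ID and have at least 10 columns:
        # ID# NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE
        if len(fields) < 10 or not fields[0].isdigit():
            continue
        name, value, thresh = fields[1], int(fields[3]), int(fields[5])
        # A threshold of 0 means the vendor defines no failure level.
        if thresh > 0 and value <= thresh:
            failing.append(name)
    return failing


# Hypothetical sample rows, invented for this example.
sample = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       0
177 Wear_Leveling_Count     0x0013   004   004   005    Pre-fail  Always   FAILING_NOW 96
"""
print(failing_attributes(sample))
```

A drive that reports nothing at all, like the ones above, gives a check like this nothing to work with, which is exactly the problem.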

Unfortunately, the company later went bankrupt, and Toshiba rescued them from the dead. They were unavailable for long enough that we simply ditched them and moved on.

HP SSDs

We briefly tried running third party SSDs on our older (HP) Graphite boxes… This was a quick, fairly cheap win as it got us a tonne of performance for relatively little money (back then we needed much less Graphite storage). This worked fine until the drives started to fail.

Unfortunately, HP have proprietary RAID controllers, and they don’t support SMART. Or rather, they refuse to talk to non-HP drives using off the shelf technology; they have their own methods.

Slot an unsupported disk or SSD into the controller, and you have no idea how that drive is performing or failing. We quickly learnt this after running for a while on these boxes, and performance randomly tanked. The SSDs underlying the RAID array seemed to be dying and slowing down, and we had no way of knowing which one (or ones), or how to fix it. Presumably the drives were not being issued TRIM commands either.

When we had to purchase a new box for our primary database, this left us with no choice: we had to pay HP for SSDs. 960GB SSDs direct from HP, properly supported, cost us around $7000 each. Yes, each. We had to buy 4 of them to get the storage we needed.

On the upside, they do have fancy detailed stats (like wear levelling) exposed via the controller and ILO, and none have failed yet almost 3 years on (in fact, they’re all showing 99% health). You get what you pay for, luckily.

Samsung

Samsung saved the day and picked up from OCZ with a ludicrously cheap 960GB offering, the 840 EVO. A consumer drive, so very limited warranty, but for the price (~$400-500) you got great IOPS and they were reliable. They had better SMART info, and seemed to play nicely with our hardware.

We have a lot of these drives:

[~/chef-repo (master)] $ knife search node block_device_sda_model:'Samsung' -a block_device.sda.model
117 items found

That’s 117 hosts with those drives, most of which have 6 each, and that doesn’t include hosts that have them behind RAID controllers (for example, our Graphite boxes). In particular, they’ve been awesome for our ELK logging cluster.

Then BB6Q happened…

I hinted that we used these for Graphite. They worked great! Who wouldn’t want thousands and thousands of IOPs for relatively little money? Buying SSDs from OEMs is still expensive, and they give you those darn fancy “enterprise” level drives. Pfft. Redundancy at the app level, right?

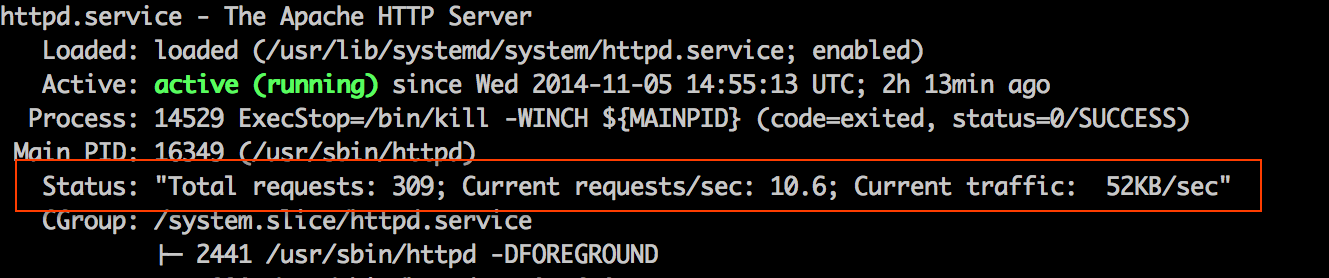

We had started buying Dell, who use a rebranded LSI RAID controller so they happily talked to the drives including providing full SMART info. We had 16 of those Samsung drives behind the Dell controller giving us 7.3TB of super fast storage.

Given the already proven pattern, we ordered the same spec box for a Ganglia hardware refresh. And they didn’t work. The RAID controller hung on startup trying to initialise the drives, so long that the Boot ROM was never loaded so it was impossible to boot from an array created using them.

What had changed?! A quick "MegaCli -AdpAllInfo -a0 | diff" on the two boxes revealed: the firmware on the drive had changed. (Shout out to those of you who know the MegaCli parameters by heart now…)
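If you’d rather script that comparison than eyeball it, a rough sketch might look like the following. It assumes you’ve already saved the controller report from each box as text; the function name, the labels and the sample report lines are all invented for illustration and are not real MegaCli output.

```python
import difflib


def report_diff(working: str, broken: str) -> list[str]:
    """Return only the lines that differ between two saved text reports."""
    diff = list(
        difflib.unified_diff(
            working.splitlines(),
            broken.splitlines(),
            fromfile="working-box",
            tofile="broken-box",
            lineterm="",
            n=0,  # no context lines, only the changes themselves
        )
    )
    # The first two lines are the ---/+++ file headers; "@@" lines mark hunks.
    return [line for line in diff[2:] if not line.startswith("@@")]


# Hypothetical report snippets, invented for this example.
working = "Model: Example SSD\nFirmware: OLD-FW\nSlots: 16"
broken = "Model: Example SSD\nFirmware: BB6Q\nSlots: 16"
print(report_diff(working, broken))
# prints ['-Firmware: OLD-FW', '+Firmware: BB6Q']
```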

Weeks of debugging and back and forth with both Dell (who were very nice given these drives were unsupported) and Samsung revealed there were definitely firmware issues with this particular BB6Q release.

It soon became public that not only did this new firmware accidentally break compatibility with Dell RAID controllers, it also had a crippling performance bug: the drives got slower and slower over time, because Samsung had messed up the block allocation algorithm.

In the end, behind LSI controllers, it turned out that particular ATA commands sent by the controller would make the drives hang and not respond, so the RAID controller had to wait for them to time out.

Samsung put out a firmware updater and “fixer” tool for this, but it needed to move your data around so only ran on Windows with NTFS.

With hundreds of these things that are in production and working, but have a crippling performance issue, we had to figure out how they would get flashed. An awesome contractor for Samsung agreed that if we drove over batches of drives (luckily, they are incredibly close to our datacenter) they would flash them and return them the next day.

This story has a relatively happy ending then; our drives are getting fixed, and we’re still buying their drives; now the 960GB 850 PRO model, as they remain a great value for money high performance drive.

Talking with other companies, we’re not alone with Samsung issues like this, even the 840 PRO has some issues that require hard power cycles to fix. But the price is hard to beat, especially now the 850 range is looking more solid.

LiteOn

LiteOn were famously known for making CD writers back when CD writers were new and exciting.

But they’re also a chosen OEM partner of Dell’s for their official “value” SSDs. Value is a relative term here, but they’re infinitely cheaper than HP’s offerings, enterprise level, fully supported and, for all that, “only” twice the price of Samsung (~$940).

We decided to buy new SSD based database boxes, because SSDs were too hard to resist for these use cases; crazy performance and at 1TB capacity, not too much more expensive per GB than spinning rust. We had to buy many many 15,000rpm drives to even get near the performance, and they were expensive at 300GB capacity. We could spend a bit more money and save power, rack space, and get more disk space and IOPs.

For similar reasons to HP, we thought it best to pay the premium for a fully supported solution, especially as Samsung had just caused us all these firmware issues.

With that in mind, we ordered some R630s hot off the production line with 960GB LiteOns, tested performance, and it was great: 30,000 random write IOPs across 4 SSDs in RAID6 (5.5 TB usable space).

We put them live, and they promptly blew up spectacularly. (Yes, we had a postmortem about this). The RAID controller claimed that two drives had died simultaneously, with another being reset by the adapter. Did we really get two disks to die at once?

This took months of working closely with Dell to figure out. We replaced drives, the backplane, and then the whole box, but the problem persisted. Just a few short hours of intense IO, especially on a box with only 4 SSDs, would cause them to flip out. And in the meantime, we’d ordered 50+ of these boxes with varying numbers of SSDs installed, since they had tested so well initially.

Eventually it transpired that, like most good problems, it was a combination of many factors that caused these issues. The SSDs were having extended garbage collection periods, exacerbated by a smaller number of SSDs with higher IO, in RAID6. This caused the controller to kick the drive out of the array… and unfortunately, due to the write levelling across the drives, at least two of them were garbage collecting at the same time, destroying the array integrity.
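To see why overlapping pauses are so dangerous, here is a minimal sketch of RAID6 fault tolerance. It only models the general rule that RAID6 survives two missing members and no more; it says nothing about how the Dell controller actually decides to drop a drive, and the names are made up for illustration.

```python
RAID6_PARITY_DRIVES = 2  # RAID6 can rebuild from at most two missing members


def raid6_state(unresponsive_drives: int) -> str:
    """Classify array health from the number of drives the controller dropped."""
    if unresponsive_drives < 0:
        raise ValueError("drive count cannot be negative")
    if unresponsive_drives == 0:
        return "optimal"
    if unresponsive_drives <= RAID6_PARITY_DRIVES:
        return "degraded"  # still serving data, but with reduced or no redundancy
    return "failed"


# Two drives marked dead plus a third being reset, as the controller reported.
for dropped in (0, 1, 2, 3):
    print(dropped, raid6_state(dropped))
```

With two drives already kicked out for pausing, the array has no redundancy left, so any further hiccup on a third drive takes it offline.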

The fix was no small deal; Dell and LiteOn together identified and fixed weaknesses in their RAID controller, the backplane and the SSD firmware. It was great to see the companies working together rather than just pointing fingers here, and the fixes for all sizes except 960GB were out within a month.

The story here continues for us though; the 960GB drive remains unsolved, as it caused more issues, and we had almost exclusively purchased those. For systems that weren’t fully loaded, Dell kindly provided us with 800GB replacements and extra drives to make up the space. For the rest, the stress spread across the 22 drives means garbage collection isn’t as intense, so they remain in operation until a firmware fix arrives.

Summary

I’m hesitant to recommend any one particular brand, because I’m sure, as with the hard disk phenomenon (the law where each person has their preferred brand that they’ve never had issues with, but everyone else has), people’s experiences will have varied.

We should probably collect some real data on this as an industry and share it around; I’ve always been of the mindset that we’re weirdly secretive sometimes of what hardware/software we use but we should share, so if anyone wants to contribute let me know.

But: you can probably continue to buy Intel and Samsung, depending on your use case/budget, and as usual, own your own availability and add resiliency to your apps and hardware, because things always fail in ways you can’t imagine.